NI ADAS Sensor Emulation

SNAPSHOT

Fall 2023: 8 weeks

Company: NI

Users: Internal ADAS engineers

Opportunity: How might we enable NI engineers to emulate third-party sensors more quickly?

Context: Sensor and bus emulation, a key part of NI’s ADAS (Advanced Driver Assistance Systems) Test offerings, can take internal teams weeks to complete and is a bottleneck to serving more customers.

Team: A Long Term Innovation (LTI) team of 3 engineers and 2 designers, focused on AI and ML applications for internal use cases

My Role: Product Designer

Outcome: Within the 8-week project, we designed an interface and an ML-based prototype that identifies I2C-register relationships in a few minutes with 98–100% accuracy, work that previously took weeks of manual effort

Latest Concepts:

The Process

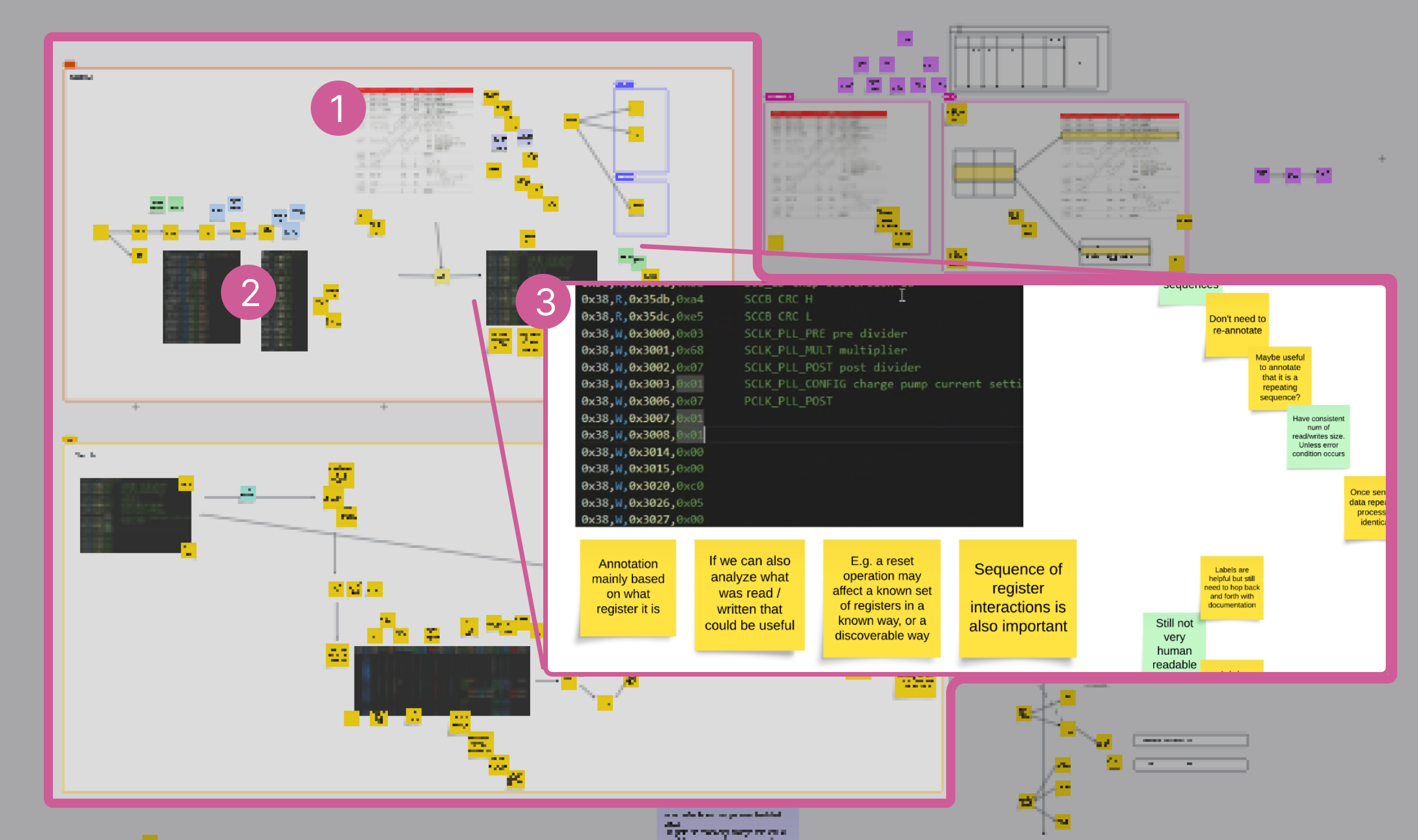

Weekly collaborative sessions via Zoom & Lucidspark canvas (annotated below) with the LTI team, users and stakeholders where we…

Mapped workflows and tools (1,2,3)

Early sessions focused on understanding the role of sensor emulation in NI’s ADAS offering, and the references, tools, and workflows of multiple users.

Mapped the human processes (4,5)

Next, again in collaboration with our users, we mapped the human reasons, intents, and challenges engineers face when working on sensor emulation, distilling as much as possible from the existing tools and workflows.

A key collaborative session focused on the whys tucked within the technical whats of the engineering tools and workflows we had initially mapped. In it, we examined the details of eventual sensor emulation (4) and the human thought processes behind the workflows and tools (5).

Brainstormed collaboratively (6,7,8)

During our brainstorming session, I sketched a top-down solution (7 at left) and our team’s data scientist imagined a more back-and-forth experience (8 at left).

Neither concept resonated completely with our users, but each drove meaningful discussions around what is and isn’t helpful, and why.

Inclined towards experimenting with working prototypes, the team singled out the document-ingestion workflow (6 at left) as an engineering priority, while I focused on iterating on the design and experience.

UX Concept (below):

Concept drove feedback, an ML prototype, and confidence (9,10)

Although the details needed refinement, the concept resonated with our users: an ML-based, interactive interface that identifies likely I2C-register relationships and gives engineers multiple ways to explore the data.

Sharing the UX concept early enabled our data scientist to begin experimenting and prototyping to see how difficult this could be. His early prototypes were promising, quickly identifying I2C-register relationships with over 98% accuracy (9 at right).

With the added confidence of an accurate ML prototype, the team continued to refine the digital interface design.

Feedback refined design (below):

Next Steps:

As a team, we continued to refine the concept weekly with an expanding group of users, and I shared concepts for feedback on our growing Airtable canvas. I also shared my working Figma file, along with links to the Flexoki color system I used, with our squad’s front-end-focused engineer.

In early November 2023, NI, which had recently become part of Emerson, laid off much of its UX team, including me.